Entropy

Definition

Entropy, denoted $H(X)$, is a key metric in BayesiaLab for measuring the uncertainty associated with the probability distribution of a variable $X$.

Entropy is expressed in bits and defined as follows:

$$H(X) = -\sum_{x \in X} p(x) \log_2 p(x)$$

The Entropy of a variable can also be understood as the sum of the Expected Log-Losses of its states.
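As a minimal sketch of this reading, the snippet below computes the log-loss of each state, weights it by the state's probability, and sums the results. The function names and the three-state distribution are hypothetical, chosen only for illustration; this is not BayesiaLab code.

```python
import math
from typing import Sequence


def log_loss(p: float) -> float:
    """Log-loss (surprisal) in bits of a state with probability p > 0."""
    return -math.log2(p)


def entropy(probs: Sequence[float]) -> float:
    """Entropy in bits as the sum of expected log-losses p * (-log2 p).

    States with zero probability are skipped (0 * log 0 is taken as 0).
    """
    return sum(p * log_loss(p) for p in probs if p > 0)


# Hypothetical three-state distribution, for illustration only.
probs = [0.5, 0.25, 0.25]
for p in probs:
    print(f"p={p}: log-loss={log_loss(p):.3f}, expected log-loss={p * log_loss(p):.3f}")
print(f"entropy = {entropy(probs):.3f} bits")
```

Rare states have a high log-loss but a small weight, so each state's contribution to the total stays bounded.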

Example

Let’s assume we have four containers, A through D, which are filled with balls that can be either blue or red.

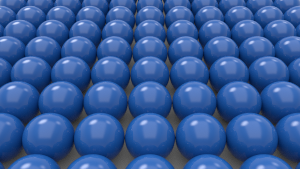

- Container A is filled exclusively with blue balls.

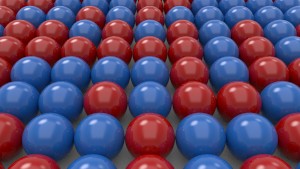

- Container B has an equal number of red and blue balls.

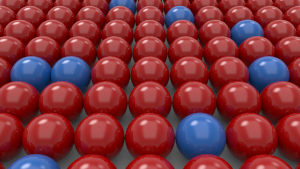

- In Container C, 10.89% of all balls are blue, and the remainder is red.

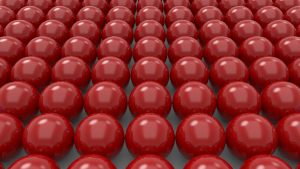

- Container D only holds red balls.

- Within each container, the order of balls is entirely random.

- A volunteer who already knows the proportions of red and blue balls in each container now randomly draws one ball from each container. What is his degree of uncertainty regarding the ball color at the moment of each draw?

- Needless to say, with Containers A and D, there is no uncertainty at all. From Containers A and D, he will draw a blue and red ball, respectively, with perfect certainty. What about the degree of certainty or, rather, uncertainty for Containers B and C?

- The concept of Entropy can formally represent the degree of uncertainty.

- We use a binary variable $X$ to represent the color of the ball.

- Using the definition of Entropy from above, we can compute the Entropy value applicable to each draw.

| Container A | Container B | Container C | Container D |
|---|---|---|---|
| 0 bits | 1 bit | ≈ 0.5 bits | 0 bits |
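The Entropy of each draw can be reproduced with a short script. This is a sketch that uses only the proportions stated in the example; the `entropy` helper and the `containers` dictionary are illustrative names, not part of BayesiaLab.

```python
import math


def entropy(probs):
    """Entropy in bits; zero-probability states contribute nothing."""
    return sum(-p * math.log2(p) for p in probs if p > 0)


# (P(blue), P(red)) for each container, taken from the example.
containers = {
    "A": (1.0, 0.0),
    "B": (0.5, 0.5),
    "C": (0.1089, 0.8911),
    "D": (0.0, 1.0),
}

entropies = {name: entropy(dist) for name, dist in containers.items()}
for name, h in entropies.items():
    print(f"Container {name}: {h:.4f} bits")
```

Containers A and D yield zero Entropy, Container B yields exactly one bit, and Container C comes out close to half a bit.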

We can also plot Entropy as a function of the probability of drawing a red ball.

We see that Entropy reaches its maximum value of 1 bit when the probability of drawing a red ball is 0.5, i.e., when drawing a red or a blue ball is equally probable. A 50/50 mix of red and blue balls is indeed the situation with the highest possible degree of uncertainty.
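The location of this maximum can also be checked analytically. In the short derivation below, $p$ is our own shorthand for the probability of drawing a red ball, so that $1 - p$ is the probability of drawing a blue one:

$$H(p) = -p \log_2 p - (1 - p) \log_2 (1 - p)$$

$$\frac{dH}{dp} = \log_2 \frac{1 - p}{p} = 0 \iff p = 0.5$$

$$H(0.5) = -0.5 \log_2 0.5 - 0.5 \log_2 0.5 = 1 \text{ bit}$$

The second derivative, $-\frac{1}{p (1 - p) \ln 2}$, is negative for all $0 < p < 1$, so this stationary point is indeed a maximum.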

Maximum Entropy as a Function of the Number of States

This was an example of a variable with two states only. As we introduce more possible states, e.g., another ball color, the maximum possible Entropy increases.

More specifically, the maximum value of Entropy increases logarithmically with the number of states of node $X$:

$$H_{\max}(X) = \log_2(n)$$

where $n$ is the number of states of the variable $X$.
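To see where the logarithm comes from, recall that Entropy is largest when all states are equally probable. Writing $n$ for the number of states (our notation for this sketch), each state then has probability $1/n$:

$$H_{\max} = -\sum_{i=1}^{n} \frac{1}{n} \log_2 \frac{1}{n} = \log_2 n$$

For example, $n = 2$ gives 1 bit, $n = 3$ gives about 1.585 bits, and $n = 4$ gives 2 bits.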

As a result, one cannot directly compare the Entropy values of variables with different numbers of states.

To make Entropy comparable, the Normalized Entropy metric is available, which takes into account the Maximum Entropy.
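The exact formula is not given here, so the following is only a sketch under the assumption that Normalized Entropy is the Entropy divided by the Maximum Entropy, i.e., by the base-2 logarithm of the number of states. The function names and example distributions are hypothetical.

```python
import math


def entropy(probs):
    """Entropy in bits; zero-probability states contribute nothing."""
    return sum(-p * math.log2(p) for p in probs if p > 0)


def normalized_entropy(probs):
    """Assumed definition: entropy divided by log2(number of states)."""
    n = len(probs)
    if n < 2:
        return 0.0  # a single-state variable carries no uncertainty
    return entropy(probs) / math.log2(n)


# Hypothetical distributions with different numbers of states.
two_states = [0.5, 0.5]
four_states = [0.25, 0.25, 0.25, 0.25]
skewed = [0.7, 0.1, 0.1, 0.1]

for name, dist in [("two_states", two_states),
                   ("four_states", four_states),
                   ("skewed", skewed)]:
    print(f"{name}: H={entropy(dist):.3f} bits, "
          f"normalized={normalized_entropy(dist):.3f}")
```

Under this assumption, both uniform distributions score 1 regardless of their number of states, which is what makes the values comparable across variables.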