Symmetric Normalized Mutual Information

Context

The following Venn Diagram illustrates that the Mutual Information is symmetrical for the two variables $X$ and $Y$, i.e., $I(X;Y) = I(Y;X)$.

However, the variables $X$ and $Y$ can each have a different number of states. Therefore, their respective entropies $H(X)$ and $H(Y)$ can be very different.

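This means that the absolute value of Mutual Information cannot be interpreted without context. In the Venn Diagram, for instance, the overlap $I(X;Y)$ covers a larger share of the smaller circle than of the larger one, so it reduces the smaller entropy by a bigger percentage than the larger entropy. As such, the same Mutual Information is more “important” with regard to the variable with the smaller entropy than with regard to the other variable.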
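To make this argument concrete, here is a minimal Python sketch using a hypothetical joint distribution (not the one drawn in the Venn Diagram): $Y$ has four equally likely states, and $X$ has two states that are fully determined by $Y$. The sketch computes entropies in bits from the joint table, checks that the Mutual Information is the same in both directions, and shows what share of each entropy it removes. The helper names `entropy` and `mutual_information` are illustrative.

```python
import math

def entropy(probs):
    """Shannon entropy in bits; zero-probability states contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def mutual_information(joint):
    """I(X;Y) = H(X) + H(Y) - H(X,Y); rows are states of X, columns are states of Y."""
    h_x = entropy([sum(row) for row in joint])
    h_y = entropy([sum(col) for col in zip(*joint)])
    h_xy = entropy([p for row in joint for p in row])
    return h_x + h_y - h_xy

# Hypothetical example: Y has 4 equally likely states, X has 2 states determined by Y.
joint = [
    [0.25, 0.25, 0.00, 0.00],  # X = x1
    [0.00, 0.00, 0.25, 0.25],  # X = x2
]
# Same distribution with the roles of X and Y swapped.
transposed = [list(col) for col in zip(*joint)]

h_x = entropy([sum(row) for row in joint])
h_y = entropy([sum(col) for col in zip(*joint)])
mi_xy = mutual_information(joint)
mi_yx = mutual_information(transposed)

print(f"H(X) = {h_x:.3f} bits, H(Y) = {h_y:.3f} bits")
print(f"I(X;Y) = {mi_xy:.3f}, I(Y;X) = {mi_yx:.3f}")  # symmetric
print(f"Share of H(X) removed: {mi_xy / h_x:.0%}")
print(f"Share of H(Y) removed: {mi_xy / h_y:.0%}")
```

With this table, $H(X) = 1$ bit and $H(Y) = 2$ bits, so the same 1 bit of Mutual Information removes all of the uncertainty about $X$ but only half of the uncertainty about $Y$.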

Definition

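The Symmetric Normalized Mutual Information measure takes the potentially different entropies of $X$ and $Y$ into account by dividing the Mutual Information by their average:

$$I_{SN}(X;Y) = \frac{2\,I(X;Y)}{H(X) + H(Y)}$$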

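As a result, we have an easy-to-interpret measure that relates to both $X$ and $Y$ together. Because $I(X;Y)$ can never exceed the smaller of the two entropies, and hence never exceeds their average, the measure ranges from 0 (the variables are independent) to 1 (each variable fully determines the other).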
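The following Python sketch computes the measure from a joint probability table, assuming the definition $2\,I(X;Y)/(H(X)+H(Y))$ with entropies in bits. The function name `symmetric_normalized_mi` and the choice to raise an error when both variables are constant are illustrative assumptions, not BayesiaLab behavior.

```python
import math

def entropy(probs):
    """Shannon entropy in bits; zero-probability states contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def symmetric_normalized_mi(joint):
    """2 * I(X;Y) / (H(X) + H(Y)); rows are states of X, columns are states of Y."""
    h_x = entropy([sum(row) for row in joint])
    h_y = entropy([sum(col) for col in zip(*joint)])
    h_xy = entropy([p for row in joint for p in row])
    if h_x + h_y == 0:
        # Hypothetical design choice: the ratio is undefined for two constant variables.
        raise ValueError("both variables are constant; the measure is undefined")
    mi = h_x + h_y - h_xy
    return 2 * mi / (h_x + h_y)

# Hypothetical joint tables (rows = X, columns = Y).
independent = [[0.25, 0.25], [0.25, 0.25]]  # X and Y independent
identical = [[0.5, 0.0], [0.0, 0.5]]        # X and Y determine each other
partial = [                                 # X determined by Y, but not vice versa
    [0.25, 0.25, 0.0, 0.0],
    [0.0, 0.0, 0.25, 0.25],
]

for name, table in [("independent", independent), ("identical", identical), ("partial", partial)]:
    print(f"{name}: {symmetric_normalized_mi(table):.3f}")
```

The three tables yield 0.000, 1.000, and 0.667: independence, a one-to-one relationship, and a case where $X$ is fully determined by $Y$ while $Y$ is only half explained by $X$. The logarithm base cancels out in the ratio, so using bits is only a convention here.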

Usage

For a given network, BayesiaLab can report the Symmetric Normalized Mutual Information in several contexts:

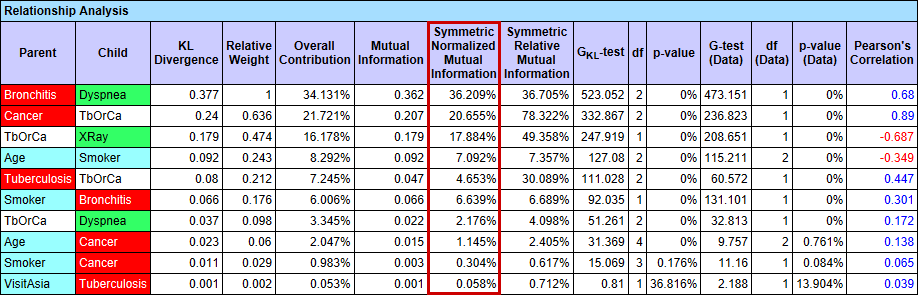

To include it in a report, select Menu > Analysis > Report > Relationship Analysis.

The Symmetric Normalized Mutual Information can also be displayed on the arcs of the graph: select Menu > Analysis > Visual > Overall > Arc > Mutual Information, then click the Show Arc Comments icon or select Menu > View > Show Arc Comments.

Note that the corresponding options under Menu > Preferences > Analysis > Visual Analysis > Arc's Mutual Information Analysis must be enabled first.

In Preferences, Child refers to the Relative Mutual Information from the Parent onto the Child node, i.e., in the direction of the arc.

Conversely, Parent refers to the Relative Mutual Information from the Child onto the Parent node, i.e., in the opposite direction of the arc.
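The Child and Parent values can be compared with the symmetric measure in a short Python sketch. It assumes that the Relative Mutual Information onto a node is $I(X;Y)$ divided by that node's entropy, and it uses a hypothetical arc Parent → Child with an arbitrary joint table. Under that assumption, the Symmetric Normalized Mutual Information equals the harmonic mean of the two directional values, so it always lies between them.

```python
import math

def entropy(probs):
    """Shannon entropy in bits; zero-probability states contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical arc Parent -> Child: rows are Parent states, columns are Child states.
joint = [
    [0.30, 0.10, 0.05],
    [0.05, 0.20, 0.30],
]

h_parent = entropy([sum(row) for row in joint])
h_child = entropy([sum(col) for col in zip(*joint)])
h_joint = entropy([p for row in joint for p in row])
mi = h_parent + h_child - h_joint

# Assumed definition: Relative Mutual Information = I divided by the target node's entropy.
rmi_child = mi / h_child    # "Child": in the direction of the arc
rmi_parent = mi / h_parent  # "Parent": against the direction of the arc
snmi = 2 * mi / (h_parent + h_child)

# Harmonic mean of the two directional values; algebraically equal to snmi.
harmonic = 2 * rmi_child * rmi_parent / (rmi_child + rmi_parent)

print(f"Child:  {rmi_child:.3f}")
print(f"Parent: {rmi_parent:.3f}")
print(f"Symmetric Normalized MI: {snmi:.3f} (harmonic mean: {harmonic:.3f})")
```

Because the two directional values differ whenever the Parent and Child entropies differ, the symmetric measure offers a single direction-independent summary of the arc.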