Introductory BayesiaLab Course

A World-Class Introduction to Bayesian Networks

Since 2009, our BayesiaLab courses and events have spanned the globe, from New York to Sydney, Paris to Singapore, bringing the power of Bayesian networks to researchers and professionals across disciplines.

This course goes far beyond a beginner’s guide. It offers a comprehensive, hands-on introduction to Bayesian networks and their applications in marketing science, econometrics, ecology, sociology, and more. Each theoretical concept is paired with a practical BayesiaLab exercise, helping you immediately apply your new knowledge to real-world challenges in knowledge modeling, causal inference, and machine learning.

More than 2,000 researchers worldwide have taken this course in person, and many now consider Bayesian networks and BayesiaLab essential to their work. Don’t just take our word for it: see what participants say in the testimonials.

Self-Study Edition

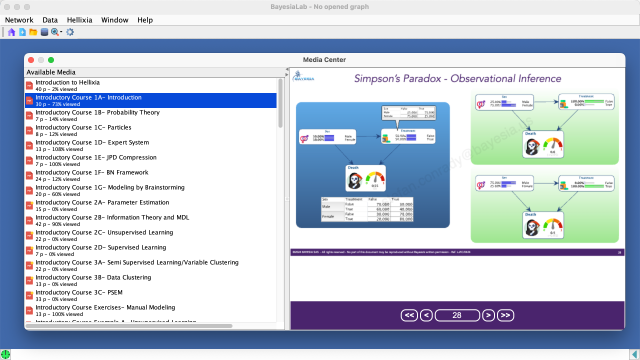

Now, you can access the same world-class training from anywhere at any time with the pre-recorded self-study edition of the three-day Introductory BayesiaLab Course, which includes:

- 60 days of access to a recorded version of a recent three-day classroom course.

- All course materials, including over 24 hours of lecture videos and software demonstrations, plus hundreds of presentation slides, datasets, and Bayesian network examples.

- A 60-day license to BayesiaLab (Education Edition), which allows you to follow the course and implement all teaching examples on your own, at your own pace.

- The BayesiaLab software installed on your computer also delivers all course materials through its integrated Learning/Media Center. Simply open BayesiaLab to have every element of the course at your fingertips.

Start Learning Today

There’s no need to wait. You can start learning right now and join the community of thousands of BayesiaLab users around the world.

The Course Content in Detail

Here are all the topics included in your BayesiaLab course program. The structure of the recorded program mirrors the highly acclaimed in-person course, which has been refined over many years of teaching researchers and practitioners all over the world.

Day 1: Theoretical Introduction

Introduction

- Bayesian Networks: Artificial Intelligence for Decision Support under Uncertainty

- Probabilistic Expert Systems

- A Map of Analytic Modeling and Reasoning

- Bayesian Networks and Cognitive Science

- Unstructured and Structured Particles Describing the Domain

- Expert-Based Modeling and/or Machine Learning

- Predictive vs. Explanatory Models, i.e., Association vs. Causation

- Examples:

- Medical Expert Systems

- Stock Market Analysis

- Microarray Analysis

- Consumer Segmentation

- Driver Analysis

- Product Optimization

Examples of Probabilistic Reasoning

- Cognitive Science: How our probabilistic brain uses priors for the interpretation of images

- Interpreting Results of Medical Tests

- Kahneman & Tversky’s Yellow Cab/White Cab Example

- The Monty Hall Problem: Solving a Vexing Puzzle with a Bayesian Network

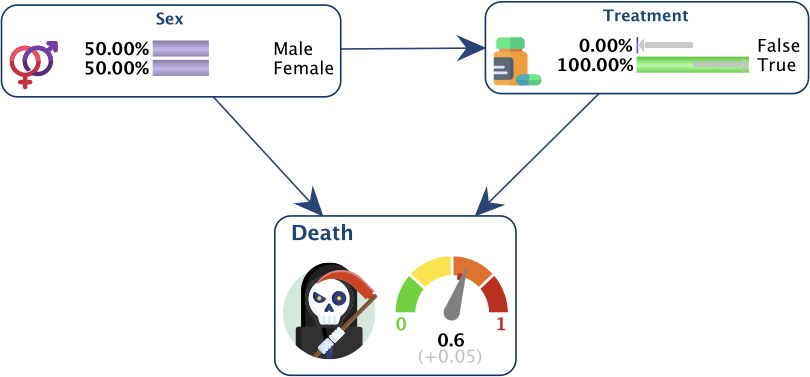

- Simpson’s Paradox: Observational Inference vs. Causal Inference
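To give a flavor of the "Interpreting Results of Medical Tests" topic, here is a minimal Python sketch of Bayes' rule applied to a diagnostic test. It is not taken from the course materials and does not use BayesiaLab. The function name `posterior_positive` and all numbers are hypothetical and chosen only for illustration.

```python
def posterior_positive(prevalence, sensitivity, specificity):
    """Return P(disease | positive test) using Bayes' rule."""
    # Probability of a positive result from a person who has the disease.
    true_pos = sensitivity * prevalence
    # Probability of a positive result from a person who is healthy.
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical inputs: 1% prevalence, 90% sensitivity, 95% specificity.
p = posterior_positive(prevalence=0.01, sensitivity=0.90, specificity=0.95)
print(f"P(disease | positive) = {p:.3f}")
```

With these assumed inputs, the posterior probability stays well below the test's sensitivity because the low prior (the prevalence) dominates.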

Probability Theory

- Probabilistic Axioms

- Perception of Particles

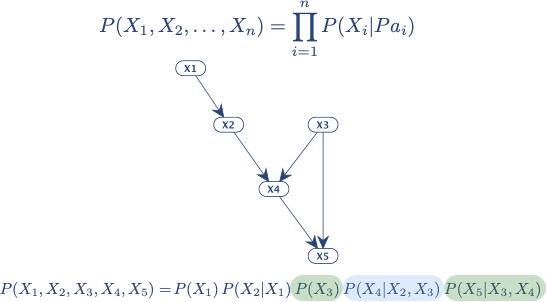

- Joint Probability Distribution (JPD)

- Probabilistic Expert System for Decision Support: Types of Requests

- Leveraging Independence Properties

- Product/Chain Rule for Compact Representation of JPD

Bayesian Networks

- Qualitative Part: Directed Acyclic Graph

- Graph Terminology

- Graphical Properties

- D-Separation

- Markov Blanket

- Quantitative Part: Marginal and Conditional Probability Distributions

- Exact and Approximate Inference in Bayesian Networks

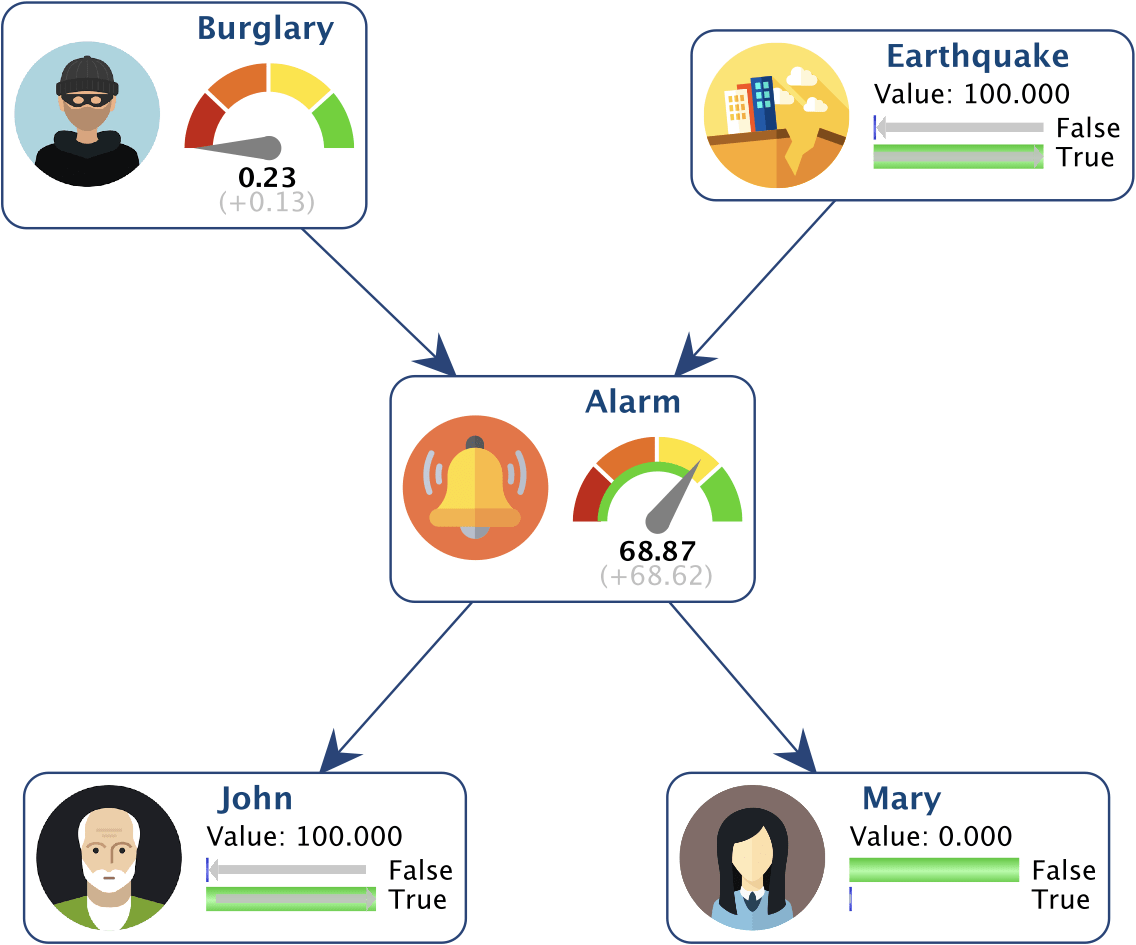

- Example of Probabilistic Inference: Alarm System

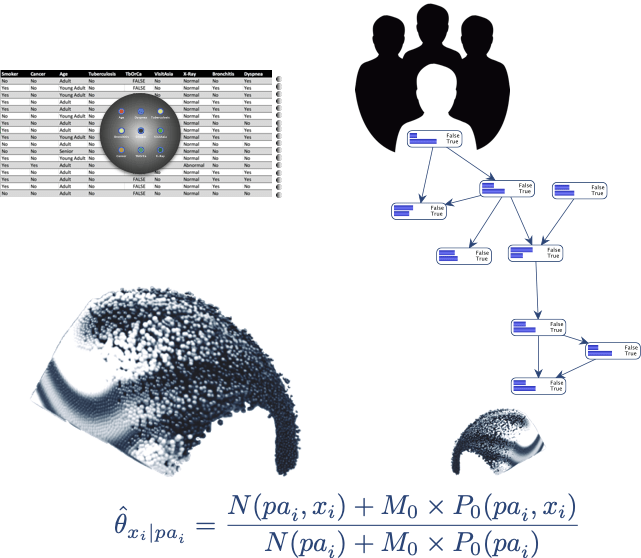

Building Bayesian Networks Manually

- Expert-Based Modeling via Brainstorming

- Why Expert-Based Modeling?

- Value of Expert-Based Modeling

- Structural Modeling: Bottom-Up and Top-Down Approaches

- Parametric Modeling

- Cognitive Biases

- BEKEE: Bayesia Expert Knowledge Elicitation Environment

Day 2: Machine Learning, Part 1

Parameter Estimation

- Maximum Likelihood Estimation

- Bayesian Parameter Estimation with Dirichlet Priors

- Smooth Probability Estimation (Laplacian Correction)

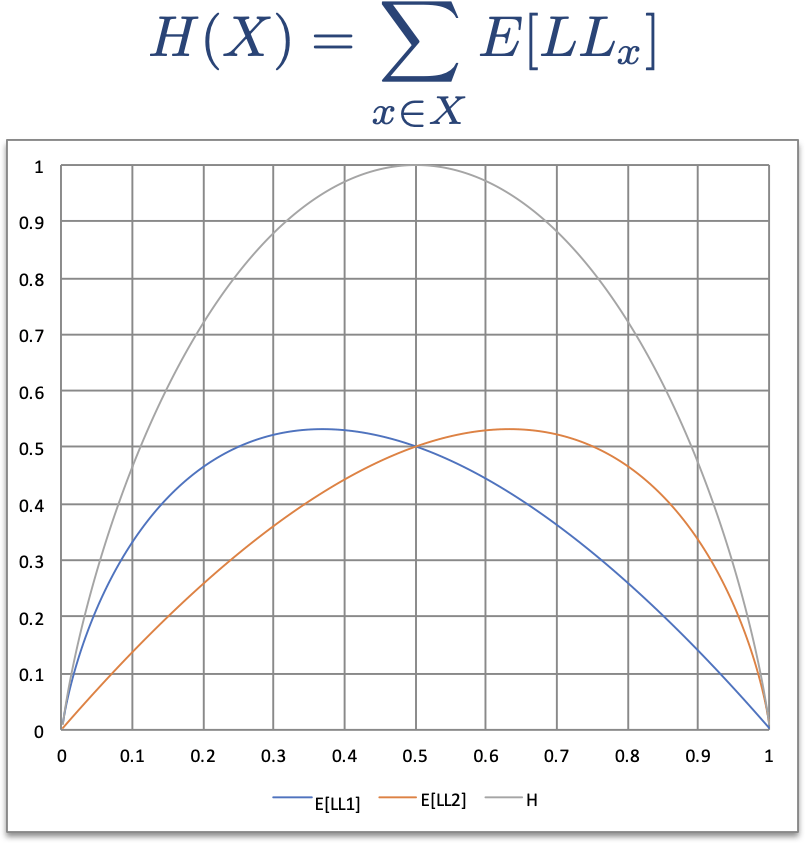
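As a rough illustration of the difference between maximum likelihood estimation and a smoothed estimate, the following Python sketch applies textbook add-alpha (Laplace) smoothing to a small set of observations. It is an assumption-laden sketch, not the course's or BayesiaLab's implementation. The helper `estimate`, the state names, and the data are all hypothetical.

```python
from collections import Counter


def estimate(observations, states, alpha=0.0):
    """Estimate state probabilities from counts.

    alpha = 0 gives the maximum likelihood estimate;
    alpha > 0 adds a pseudo-count per state (add-alpha smoothing).
    """
    counts = Counter(observations)
    total = len(observations) + alpha * len(states)
    return {s: (counts[s] + alpha) / total for s in states}


# Hypothetical data: the state "high" is never observed.
states = ["low", "medium", "high"]
data = ["low", "low", "medium", "low"]

mle = estimate(data, states)                   # "high" gets probability 0
smoothed = estimate(data, states, alpha=1.0)   # "high" gets 1/7

print(mle)
print(smoothed)
```

The smoothed estimate never assigns zero probability to an unobserved state, which matters when a small dataset does not cover every combination of values.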

Information Theory

- Information as a Measurable Quantity: Log-Loss

- Expected Log-Loss

- Entropy

- Conditional Entropy

- Mutual Information

- Symmetric Relative Mutual Information

- Kullback-Leibler Divergence

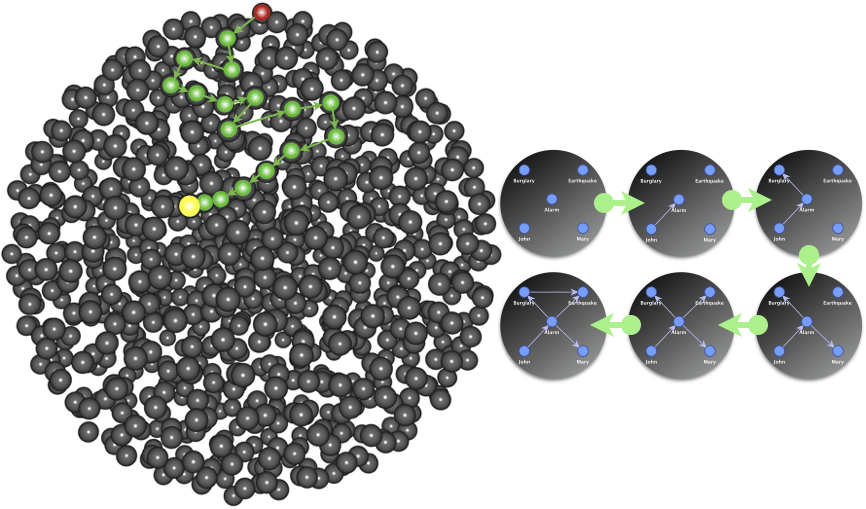
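For readers who would like to see two of these quantities computed, here is a short Python sketch of entropy and mutual information for discrete variables, measured in bits. It is independent of BayesiaLab. The function names and the joint probability table are hypothetical and serve only as an illustration.

```python
import math


def entropy(probs):
    """Shannon entropy in bits of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def mutual_information(joint):
    """Mutual information in bits from a joint table P(X, Y) given as rows."""
    px = [sum(row) for row in joint]           # marginal of X
    py = [sum(col) for col in zip(*joint)]     # marginal of Y
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0:
                mi += pxy * math.log2(pxy / (px[i] * py[j]))
    return mi


# Hypothetical joint distribution of two binary variables.
joint = [[0.4, 0.1],
         [0.1, 0.4]]

print(entropy([0.5, 0.5]))        # entropy of a fair coin
print(mutual_information(joint))  # information shared by X and Y
```

Mutual information is zero when the two variables are independent and grows as knowing one variable reduces the uncertainty about the other.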

Unsupervised Structural Learning

- Entropy Optimization

- Minimum Description Length (MDL) Score

- Structural Coefficient

- Minimum Size of Data Set

- Search Spaces

- Search Strategies

- Learning Algorithms

- Maximum Weight Spanning Tree

- Taboo Search

- EQ

- TabooEQ

- SopLEQ

- Taboo Order

- Data Perturbation

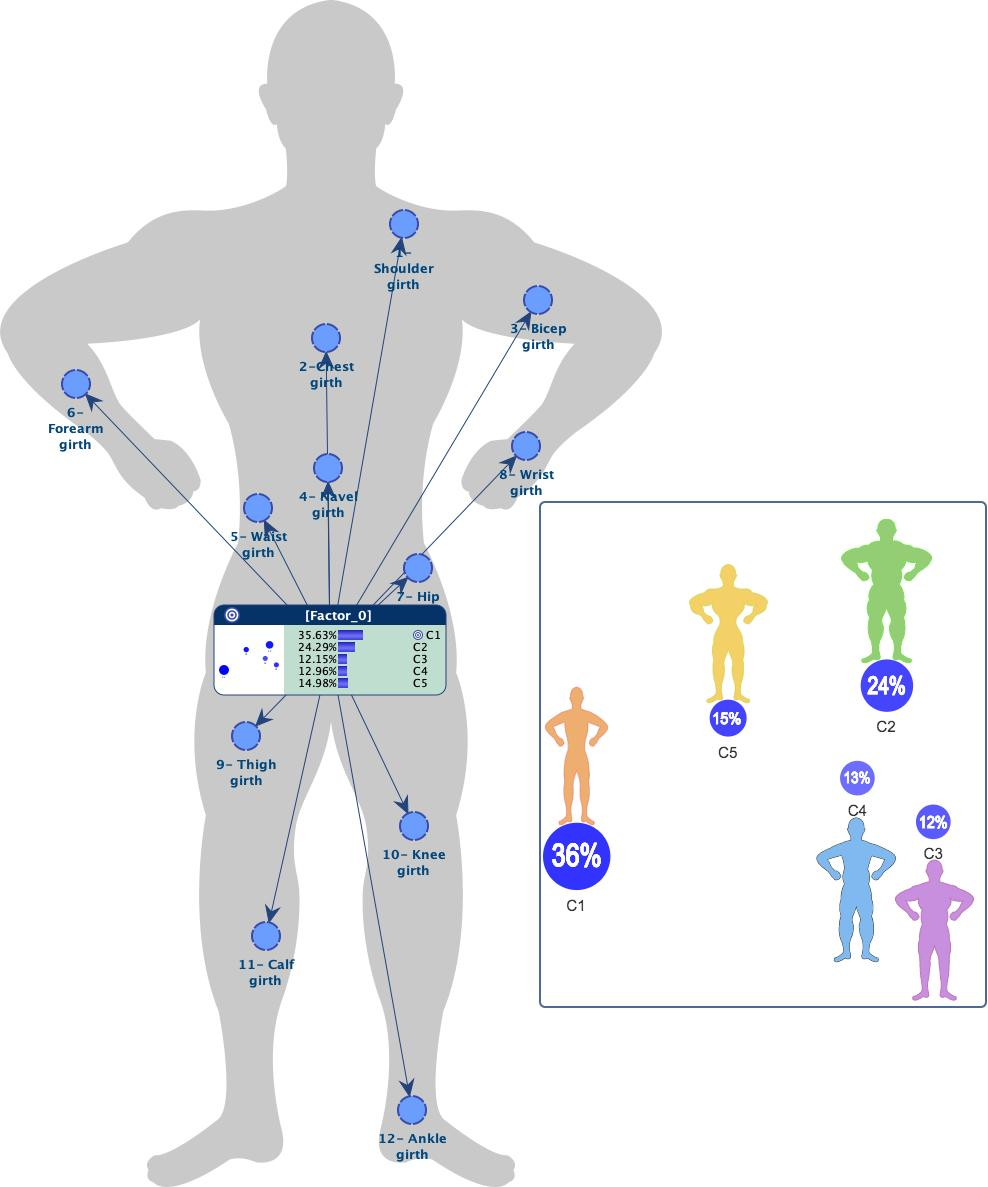

- Example: Exploring the relationships in body dimensions

- Data Import (Typing, Discretization)

- Definition of Classes

- Excluding a Node

- Heuristic Search Algorithms

- Data Perturbation (Learning, Bootstrap)

- Choosing the Structural Coefficient

- Console

- Symmetric Layout

- Model Analysis: Arc Force, Node Force, and Pearson Coefficient

- Dictionary of Node Positions

- Adding a Background Image

Supervised Learning

- Learning Algorithms

- Naive

- Augmented Naive

- Manual Augmented Naive

- Tree-Augmented Naive

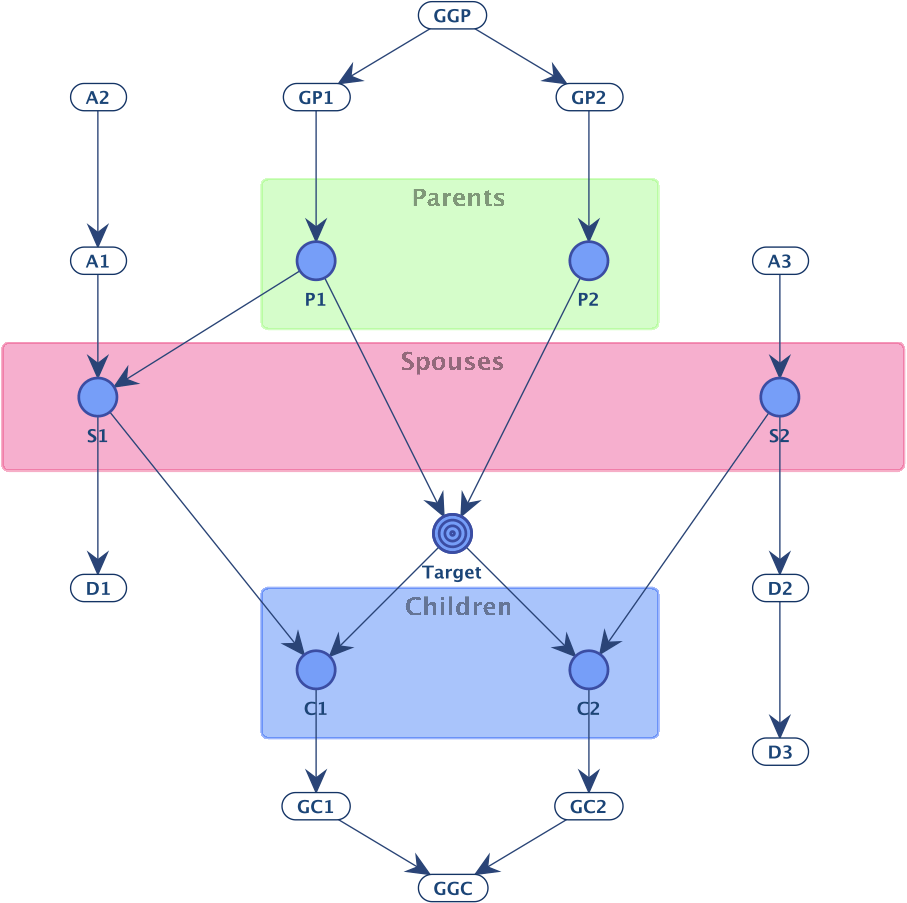

- Sons & Spouses

- Markov Blanket

- Augmented Markov Blanket

- Minimal Augmented Markov Blanket

- Variable Selection with the Markov Blanket

- Example: Predictions Based on Body Dimensions

- Data Import (Data Type, Supervised Discretization)

- Heuristic Search Algorithms

- Target Evaluation (In-Sample, Out-of-Sample: K-Fold, Test Set)

- Smoothed Probability Estimation

- Analysis of the Model (Monitors, Mapping, Target Report, Target Posterior Probabilities, Target Interpretation Tree)

- Evidence Scenario File

- Automatic Evidence-Setting

- Adaptive Questionnaire

- Batch Labeling

Day 3: Machine Learning, Part 2

Semi-Supervised Learning - Variable Clustering

- Algorithms

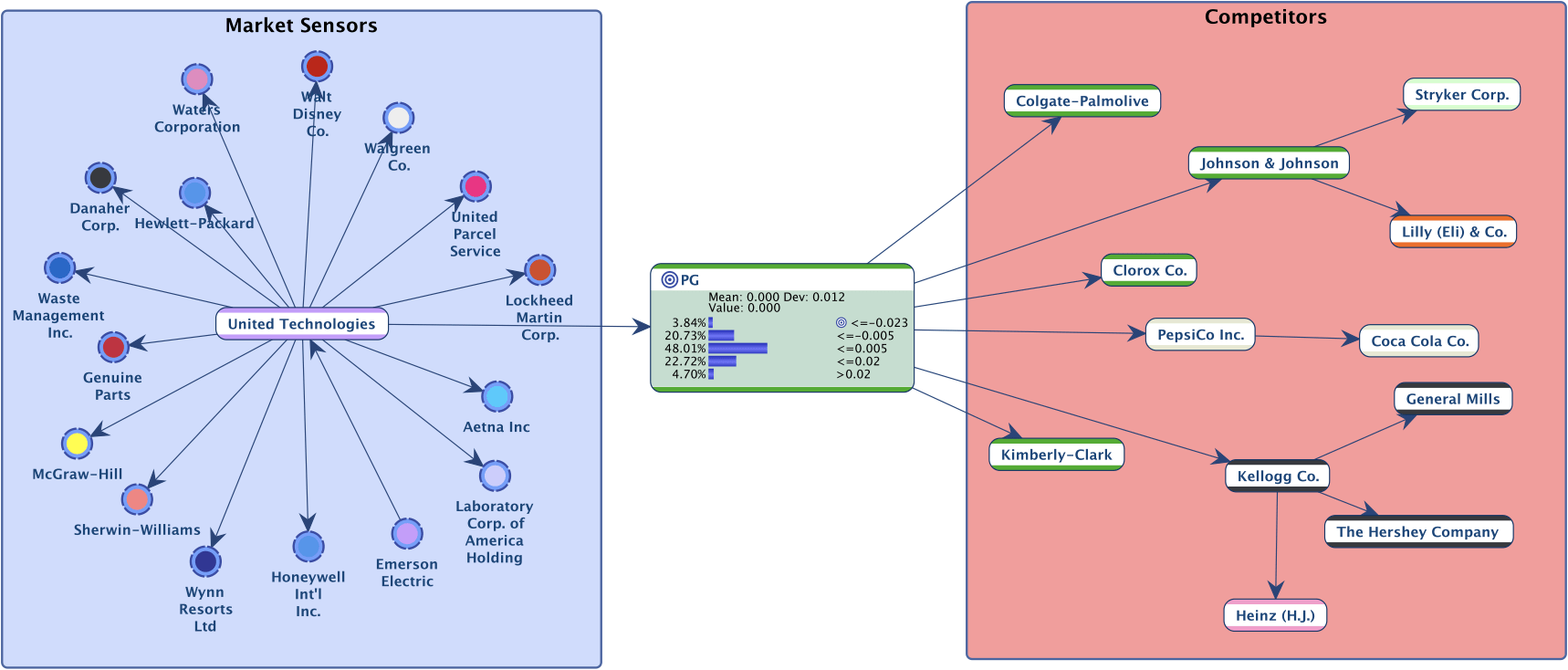

- Example: S&P 500 Analysis

- Variable Clustering

- Changing the Number of Clusters

- Dynamic Dendrogram

- Dynamic Mapping

- Manual Modification of Clusters

- Manual Creation of Clusters

- Semi-Supervised Learning

- Search Tool (Nodes, Arcs, Monitors, Actions)

- Sticky Notes

- Variable Clustering

Data Clustering

- Synthesis of a Latent Variable

- Expectation-Maximization Algorithm

- Ordered Numerical Values

- Cluster Purity

- Cluster Mapping

- Log-Loss and the Entropy of Data

- Contingency Table Fit

- Hypercube Cells Per State

- Example: Segmentation of Men Based on Body Dimensions

- Data Clustering (Equal Frequency Discretization, Meta-Clustering)

- Quality Metrics (Purity, Log-Loss, Contingency Table Fit)

- Posterior Mean Analysis (Mean, Delta-Means, Radar charts)

- Mapping

- Cluster Interpretation with Target Dynamic Profile

- Cluster Interpretation with Target Optimization Tree

- Projection of the Cluster on Other Variables

Probabilistic Structural Equation Models

- PSEM Workflow

- Unsupervised Structural Learning

- Variable Clustering

- Multiple Clustering for Creating a Factor Variable (via Data Clustering) per Cluster of Manifest Variables

- Unsupervised Learning for Representing the Relationships between the Factors and the Target Variable

- Example: The French Market of Perfumes

- Cross-Validation of the Clusters of Variables

- Display of Classes

- Total Effects

- Direct Effects

- Direct Effect Contributions

- Tornado Analysis

- Taboo, EQ, TabooEQ, and Arc Constraints

- Multi-Quadrant Analysis

- Exporting Variations

- Target Optimization (Dynamic Profile)

- Target Optimization (Tree)

About the Instructor

Dr. Lionel Jouffe is co-founder and CEO of France-based Bayesia S.A.S. Lionel holds a Ph.D. in Computer Science from the University of Rennes and has worked in Artificial Intelligence since the early 1990s. While working as a Professor/Researcher at ESIEA, Lionel started exploring the potential of Bayesian networks. Since co-founding Bayesia in 2001, he and his team have been working full-time on the development of BayesiaLab, which has emerged as the leading software package for knowledge discovery, data mining, and knowledge modeling using Bayesian networks. It enjoys broad acceptance in academic communities, business, and industry.

FAQ

Who should sign up for this course?

Applied researchers, statisticians, data scientists, data miners, decision scientists, biologists, ecologists, environmental scientists, epidemiologists, predictive modelers, econometricians, economists, market researchers, knowledge managers, marketing scientists, operations researchers, social scientists, and students and teachers in related fields.

What background is required?

- Basic data manipulation skills, e.g., with Excel.

- No prior knowledge of Bayesian networks is required.

- No programming skills are required. You will use the graphical user interface of BayesiaLab for all exercises.

- For a general overview of this field of study, we suggest you read our free e-book, Bayesian Networks & BayesiaLab. Although not mandatory, reading its first three chapters would be an excellent preparation for the course.